Optimize Your AWS Data Lake with Streamsets Data Pipelines and ChaosSearch

Many enterprises face significant challenges when it comes to building data pipelines in AWS, particularly around data ingestion. As data from diverse sources continues to grow exponentially, managing and processing it efficiently in AWS is critical. Without these capabilities, it’s harder to analyze and get any meaning from your data. This article explores how cloud platforms like StreamSets and ChaosSearch can optimize your AWS data ingestion pipeline processes, offering a streamlined solution to handle both structured and unstructured data for efficient analytics.

The Data Ingestion Challenge in AWS

As enterprises collect data from a number of places — such as applications, devices, and cloud services — the complexity of managing this data increases. Companies often struggle with setting up resilient AWS ingestion pipelines that can process streaming, batch, and change data capture (CDC) workloads. The challenge is not just about getting data into AWS but also maintaining data security, ensuring access control, and making sense of the data before it can provide value.

AWS offers many services to address this challenge, but managing a robust AWS data pipeline architecture for real-time and batch processing requires the right tools. The sheer volume and variety of data — from log files to large CSV tables — create further complications. To unlock the value within this data, enterprises need scalable solutions for streaming analytics in AWS, detecting data drift, and enriching raw logs with additional context.

Let’s dive deeper into the challenges associated with AWS data pipeline architectures for real-time and batch processing, and why the right tools are essential for handling these issues.

The Complexity of AWS Data Pipeline Architectures

AWS data pipeline architectures serve as the backbone for integrating, transforming, and analyzing data across the cloud. AWS provides a rich set of tools, such as AWS Glue, Amazon Kinesis, AWS Data Pipeline, and Amazon EMR, each suited for different use cases. Even so, orchestrating these services into a cohesive and efficient data pipeline can come with significant challenges.

1. Real-Time vs. Batch Processing

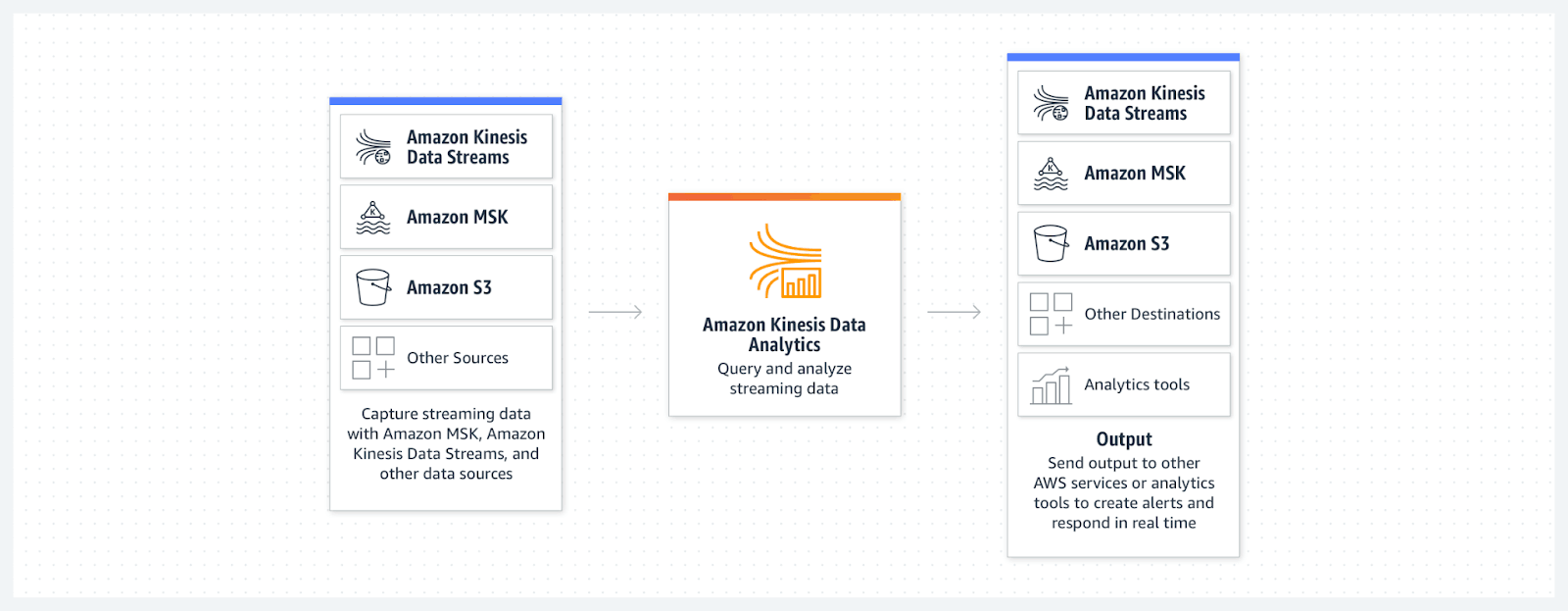

Real-time processing involves streaming data as it's generated, making it ideal for applications such as fraud detection, live user behavior analysis, and instant operational insights. Real-time processing requires tools like Amazon Kinesis to capture and process the stream of data continuously.

On the other hand, batch processing processes data in intervals, typically handling larger sets of data over time. This is useful for use cases like ETL jobs, nightly data warehouse updates, or running large-scale analytics reports using tools such as AWS Glue or Amazon EMR.

Managing both types of processing simultaneously within a single AWS ingestion pipeline requires careful architectural design to ensure scalability and efficiency. Another best practice for streaming analytics and batch processing alike is to store all of your data in Amazon S3 object storage, and leverage tools like ChaosSearch and StreamSets to analyze data in any format. We’ll talk more about that later.

Streaming analytics architecture using Amazon Kinesis

2. Handling the Volume and Variety of Data

Enterprises often deal with diverse data types, from unstructured logs to semi-structured JSON files and large CSV tables. Each data format has its own challenges in terms of processing, storage, and querying:

- Log files generated by systems, applications, or cloud services are often unstructured, making it difficult to search, analyze, or derive insights without proper indexing and transformation.

- Semi-structured data like JSON requires schema interpretation, which can change over time, leading to data drift — the challenge where data structures evolve unexpectedly. This could result in pipeline failures if not properly managed.

- Large CSV tables represent structured data that needs to be efficiently queried and processed. However, these tables can grow quickly and become difficult to handle without scalable storage and processing options. Querying large datasets on AWS can quickly get costly and time consuming to manage.

3. Scalability and Performance

As enterprises continue to capture more data, scalability becomes critical. Without the right tools, AWS data pipelines can easily become bottlenecked, reducing performance and increasing costs. The sheer volume of data can overwhelm traditional ETL processes, slowing down data availability and hampering decision-making. This can be particularly challenging for AWS serverless log management.

Tools like ChaosSearch are highly useful for handling large datasets and enabling distributed processing, while StreamSets can build pipelines that adapt to data growth by dynamically scaling as more data flows in. Using ChaosSearch can also dramatically reduce AWS log costs (see even more AWS logging tips here).

The Difference Processing Data on AWS with Advanced Tools

To address the specific challenges in AWS data pipeline architectures, advanced tools like StreamSets and ChaosSearch introduce features to help enterprises maximize efficiency and gain deeper insights:

- Data Drift Detection: One of the biggest issues with growing data architectures is data drift, where unexpected changes to the data format or structure disrupt the pipeline. StreamSets solves this with smart data pipelines that automatically detect and adjust to these changes. Without such a feature, data pipelines often break when encountering new data formats, leading to delays and increased maintenance efforts.

- Data Enrichment: Simply ingesting raw data isn’t enough to derive actionable insights. Data enrichment provides additional context to raw logs, such as adding metadata, mapping IP addresses to geographical locations, or transforming machine IDs into human-readable labels. This ensures that the data flowing through the pipeline is more useful for downstream analysis and decision-making.

- Schema-on-Read: Even if you aren’t able to enrich your data, ChaosSearch uses a schema-on-read approach. This allows you to query data in its native format without needing to apply a rigid schema during ingestion. This flexibility makes handling diverse data formats (structured, semi-structured, and unstructured) much more manageable and allows enterprises to analyze their data as soon as it lands in Amazon S3, without the need for complex transformations.

Let’s explore both StreamSets and ChaosSearch capabilities in more detail.

How to Get Data Flowing with the StreamSets Data Pipeline

StreamSets, a leading DataOps platform acquired by IBM in 2024, brings innovation to data ingestion pipelines by providing smart data pipelines capable of automatically detecting and adjusting to data drift. With its support for multiple data formats such as JSON, Parquet, and CSV, StreamSets simplifies the challenge of integrating diverse data sources into a unified data lake on AWS.

Key Benefits of Using the StreamSets AWS Integration:

- Data Enrichment: With StreamSets, you can enrich data, transforming raw inputs into processed data that offers deeper insights. For example, a log file with IP addresses can be enriched with geolocation data, making it easier to analyze trends by location.

- Data Drift Detection: StreamSets’ smart pipelines can detect and adjust to changes in data structure, ensuring pipelines perform consistently over time without requiring constant maintenance.

- Support for Streaming, Batch, and CDC: StreamSets enables building pipelines for real-time data streaming, batch processing, and CDC, making it a versatile choice for handling various AWS data pipeline architectures.

- Data Security and Access Control: With comprehensive support for access control, StreamSets ensures secure handling of data across its pipelines, from ingestion to storage.

Enhancing Your AWS Data Lake Architecture with ChaosSearch

Once your data reaches Amazon S3, ChaosSearch provides the next level of transformation and analytics. By indexing data directly in Amazon S3, ChaosSearch eliminates the need for data movement and expensive ETL processes, making your data lake architecture more efficient and cost-effective. The ChaosSearch platform integrates seamlessly with StreamSets, offering scalable multi-model data access and enabling businesses to extract insights from their data lake without moving or duplicating it.

How ChaosSearch Benefits AWS Ingestion Pipelines:

- No Data Movement: Data is indexed directly in Amazon S3, reducing costs and avoiding data movement between storage and analytics layers.

- Schema-on-Read: This allows businesses to analyze their data as it is, supporting various formats and making the analysis process more dynamic.

- Multi-Model Access: Users can run SQL queries, full-text searches, and machine learning queries on their data without restructuring or ETL processes.

A Comprehensive Solution for Maintaining Data and Driving Growth

Combining the strengths of StreamSets and ChaosSearch gives teams an end-to-end solution for building and maintaining resilient data ingestion pipelines in AWS. With real-time data collection, enrichment, and transformation, businesses can make data-driven decisions faster and more efficiently.

- Resilient Data Pipelines: StreamSets supports building pipelines that perform consistently across a wide range of data sources, ensuring that your data flows seamlessly into your AWS data lake.

- Centralized Control: StreamSets’ control hub offers centralized management of all your data pipelines, ensuring operational transparency and pipeline health.

- Scalability: Both StreamSets and ChaosSearch are designed to scale, handling large numbers of pipelines and ensuring that processed data is ready for analysis at any scale.

By integrating these solutions, teams can overcome the challenges of AWS data pipeline architectures, handling both structured and unstructured data with ease. As a result, they can get actionable insights from their data lakes securely — and in a way that scales with the modern cloud environment.

Ready to unlock the full potential of your AWS data lake?