Inside the Modern Data Analytics Stack

Data analytics is nothing new. For decades, businesses have been deploying a "stack" of data analytics tools to collect, transform, evaluate and report on data.

However, as data volumes have grown, and as the ability to analyze data quickly and accurately has become ever more important to business success, the data analytics stacks that businesses depend on have evolved significantly.

So, if you haven't taken a look at data analytics stacks recently, they're worth revisiting. As this blog explains, the modern data analytics stack looks quite different from the analytics stacks of old.

What is a Data Analytics Stack?

A data analytics stack is the set of software tools and technologies used by enterprise DevOps teams, data engineers, and business analysts to collect, aggregate, organize, analyze and report on data.

Data analytics workflow. Organizations need a modern data analytics stack that supports every stage of the analytics workflow, from data collection and aggregation to analysis and reporting.

Data analytics processes vary widely depending on which types of data an organization is analyzing and which types of insights it seeks from the data, so there’s no single set of tools or one-size-fits-all solution for deploying a functional data analytics stack.

In general, however, a typical technology stack for big data analytics includes tools to perform the following functions:

- Data Collection and Aggregation: Organizations deploy data collection and aggregation tools to gather large amounts of raw data from a variety of sources, including user-facing applications, cloud infrastructure and services, and transactional systems. Centralizing data in a single location lets enterprises gain insights by analyzing and correlating data from multiple sources.

- Data Transformation and Processing: Aggregated data must be cleaned, normalized, or transformed before it can be analyzed to extract high-quality insights. Organizations use data transformation tools to process data and prepare it for analysis. Transforming data may involve applying a schema, converting data from diverse sources into a common structure or format, or migrating data from one type of database to another. Tools related to data transformation and processing include:

  - Data Pipelines: A data pipeline is a system used to transmit and process data between a source and a destination. Data pipelines collect raw data from a source, transform the data into a desired format, and ship the data to a downstream data storage repository.

  - Extract-Transform-Load (ETL) Tools: Some data analytics stacks use ETL tools that help with data integration and management by automating the process of extracting raw data from multiple sources, transforming the data into a desired target format, and loading the data into a data warehouse.

  - Extract-Load-Transform (ELT) Tools: ELT tools are similar to ETL tools, but they reverse the last two steps: organizations extract the raw data, load it into a data warehouse in its original form, and apply transformations afterward inside the warehouse, often at query time.

- Data Storage: Data that has been collected and aggregated from IT and transactional systems may be stored in a data warehouse, a data lake, a cloud-based object storage service like Amazon S3, or a conventional database.

- Data Quality Control: Data quality tools may be used as part of the analytics process to identify and correct inaccuracies, redundancies, missing data, or other flaws inside data sets that could hinder analytics efforts or lead to poor results.

- Data Analysis: When data is ready to be analyzed, data analytics tools interpret it by running queries against the data and displaying the results.

- Data Visualization and Reporting: Enterprise DevOps teams, security teams, and business intelligence analysts use data visualization and reporting tools to share the results of analytics operations and communicate insights that support data-driven decision-making.
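
The difference between the ETL and ELT approaches in the list above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production pipeline: an in-memory SQLite database stands in for a data warehouse, and the sample events, table names, and function names (`transform`, `run_etl`, `run_elt`, `totals_by_customer`) are all hypothetical.

```python
import sqlite3

# Hypothetical raw events, as they might arrive from different sources.
RAW_EVENTS = [
    {"customer": " Alice ", "amount": "19.99", "source": "web"},
    {"customer": "bob", "amount": "5", "source": "mobile"},
    {"customer": "ALICE", "amount": "0.01", "source": "web"},
]


def transform(event):
    """Normalize one raw event into a common structure."""
    return (
        event["customer"].strip().lower(),
        float(event["amount"]),
        event["source"],
    )


def run_etl(conn, events):
    """ETL: transform in the pipeline, then load only the cleaned rows."""
    conn.execute("CREATE TABLE purchases (customer TEXT, amount REAL, source TEXT)")
    conn.executemany(
        "INSERT INTO purchases VALUES (?, ?, ?)",
        [transform(e) for e in events],
    )


def run_elt(conn, events):
    """ELT: load raw rows untouched, then transform inside the store."""
    conn.execute("CREATE TABLE raw_purchases (customer TEXT, amount TEXT, source TEXT)")
    conn.executemany(
        "INSERT INTO raw_purchases VALUES (:customer, :amount, :source)",
        events,
    )
    # The view applies the same cleanup as transform(), but at query time.
    conn.execute(
        """
        CREATE VIEW purchases AS
        SELECT lower(trim(customer)) AS customer,
               CAST(amount AS REAL) AS amount,
               source
        FROM raw_purchases
        """
    )


def totals_by_customer(conn):
    """Analysis step: the same query works against either layout."""
    rows = conn.execute(
        "SELECT customer, ROUND(SUM(amount), 2) FROM purchases "
        "GROUP BY customer ORDER BY customer"
    )
    return dict(rows.fetchall())


if __name__ == "__main__":
    for loader in (run_etl, run_elt):
        conn = sqlite3.connect(":memory:")
        loader(conn, RAW_EVENTS)
        print(loader.__name__, totals_by_customer(conn))
```

Both loaders expose a `purchases` relation with the same shape, so the analysis query does not need to know which approach was used. The practical trade-off is that the ELT variant keeps the raw rows available for later re-transformation, while the ETL variant stores only the cleaned data.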

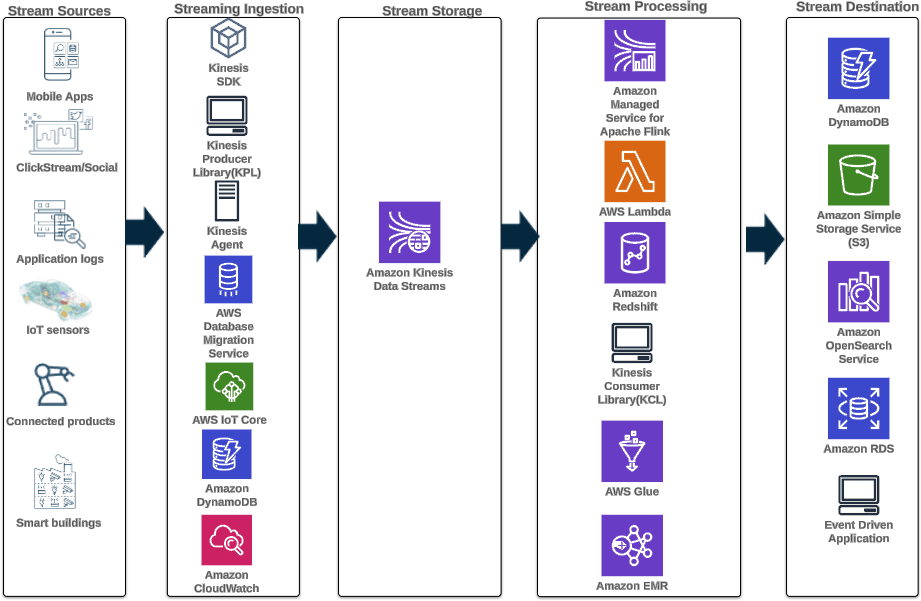

A streaming analytics technology stack built with AWS services. When data arrives at the streaming destination, customers can query the data using their own analytics or business intelligence tools.

How the Data Analytics Stack Has Evolved

The types of data operations described above have long been important to data analytics. However, the types of tools used to perform those functions, and the way those tools are integrated to form a data analytics stack, have changed for several key reasons.

Migration to the cloud

94 percent of enterprises today use the cloud. That means that a lot of the data that businesses need to analyze lives in the cloud by default. Having data analytics tools that can analyze that data as readily as possible (ideally, without having to move it from its original source) is a key aspect of the modern data analytics stack.

That's especially true for businesses that take advantage of cloud-based data warehousing services. If you can perform analytics operations on data inside a data warehouse without having to move or transform it first, you'll get faster, more actionable results.

Diverse analytics use cases

Product development teams use product data analytics to understand how customers are engaging with their products and to prioritize features or improvements that enhance the customer experience. Game developers use gaming analytics to maximize player engagement and monetization. Enterprise DevOps and security teams use streaming analytics to analyze logs from cloud-based applications on AWS.

The increasing diversity of analytics use cases means that organizations need more sophisticated tools and strategies to efficiently analyze data at scale.

Cost control

As the volume of data that businesses generate has grown, so has the challenge of ensuring that they can ingest, analyze and store all of the data cost-effectively. One way to control costs is to deploy a data analytics stack that minimizes the amount of data movement that needs to take place within the analytics pipeline. In this way, businesses can reduce data egress fees, which they typically have to pay when they move data from one environment into another.
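
A rough back-of-the-envelope calculation shows why data movement matters for cost. The sketch below is illustrative only: the function name `monthly_egress_cost`, the daily volumes, and the per-gigabyte price are all assumptions chosen for the example, not quoted rates from any provider.

```python
def monthly_egress_cost(gb_moved_per_day, price_per_gb, days=30):
    """Estimate the monthly fee for moving data out of an environment."""
    return gb_moved_per_day * price_per_gb * days


# Assumed figures for illustration only.
ASSUMED_PRICE_PER_GB = 0.09   # hypothetical egress price in dollars
RAW_GB_PER_DAY = 500          # raw data copied out to a separate analytics system
RESULTS_GB_PER_DAY = 5        # only query results leave when data is analyzed in place

copy_out = monthly_egress_cost(RAW_GB_PER_DAY, ASSUMED_PRICE_PER_GB)
in_place = monthly_egress_cost(RESULTS_GB_PER_DAY, ASSUMED_PRICE_PER_GB)

if __name__ == "__main__":
    print(f"Copying raw data out: ${copy_out:,.2f} per month")
    print(f"Analyzing in place:   ${in_place:,.2f} per month")
```

Under these assumed numbers, the cost scales linearly with the volume moved, so cutting data movement by a factor of 100 cuts the egress bill by the same factor.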

Administrative complexity

While open-source data analytics tools (like Elasticsearch) are powerful, deploying and managing them requires significant time and money. That's one reason why businesses today increasingly opt for fully managed, easy-to-deploy data analytics tools, which reduce their total cost of ownership and administrative burden.

Data security

Data security and compliance requirements are tighter than ever. That means that maintaining the security of the data analytics stack is a key priority.

So is ensuring that data analytics can deliver effective insights for security operations teams.

Who Needs a Data Stack?

Not every business needs a full suite of data collection, transformation, analytics and reporting tools. If you only need to perform one specific type of data analytics operation (such as analyzing Amazon CloudWatch log data or performing security analytics), an analytics tool that is purpose-built for that use case may suffice.

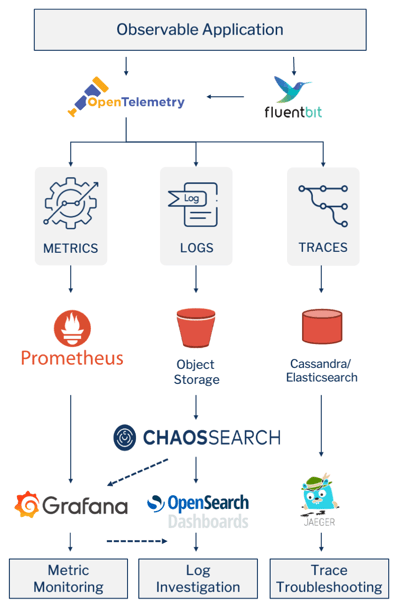

This modern data analytics stack combines ChaosSearch with open-source data collection tools (OpenTelemetry, Fluent Bit), open-source analytics tools (Grafana, Jaeger, OpenSearch Dashboards), and cloud services (object storage, Cassandra/Elasticsearch) into a modular solution for digital business observability.

But for businesses with multiple types of data to analyze, and multiple analytics use cases to support, data analytics stacks provide the foundation for achieving actionable, data-based insights over the long term. That's especially true if your data stack is flexible enough to adapt and scale as your business needs change.

How to Build a Modern Data Analytics Stack

Given the many considerations at play in creating a cloud-friendly, cost-effective, easy-to-maintain and secure data analytics stack, building a stack suited to your business is no simple feat.

To simplify the process and optimize your data analytics stack, it helps to prioritize data storage and analytics solutions that are agnostic, meaning they can work with any type of data and support any analytics use case. Be sure, too, to think about the total cost of ownership, rather than looking just at the direct cost of your data analytics tools. And make sure your stack can deliver not just the insights you need today, but also those that your business may require in the future.

Ready to learn more?

Watch our free on-demand webinar Data Architecture Best Practices for Advanced Analytics to learn more about implementing a modern data analytics stack that can support advanced analytics applications.