Eliminate Data Transfer Fees from Your AWS Log Costs

As businesses generate, capture, and seek to analyze more data than ever before, they often find themselves limited by high data storage costs, expensive data processing fees, and high management overhead. For organizations that want to expand their log analytics programs and become more data-driven, maximizing cost efficiency has become a critical operational objective.

While competing solutions often necessitate costly data movement and duplication, the ChaosSearch data lake platform was engineered to minimize data storage costs and eliminate the need for data movement, resulting in a cost-optimized approach to data analytics at scale.

In this week’s blog, we’re taking a closer look at how ChaosSearch uses AWS infrastructure and services to provide a cost-effective log analytics solution that avoids unnecessary data transfer fees.

Data Movement Makes Enterprise Analytics Expensive

Organizations are moving application workloads into the cloud and generating a growing volume of log data from cloud-deployed applications and services. Public cloud storage services like Amazon S3 provide a convenient and cost-effective way to store data before analysis. But what happens when it’s time to analyze the data?

Read: Leveraging Amazon S3 Cloud Object Storage for Analytics

Log analytics solutions are offered in several deployment models, with self-hosted and SaaS being the most common. Whatever the deployment model, one important step is deciding how to send logs from the Virtual Private Clouds (VPCs) hosting your applications to your log analytics solution in a secure, scalable, and cost-effective way. Although logs usually do not contain Personally Identifiable Information (PII), they still carry sensitive information and must be transferred securely.

For self-hosted and SaaS solutions running on AWS, VPC Peering and PrivateLink are the two most common options to connect the VPCs:


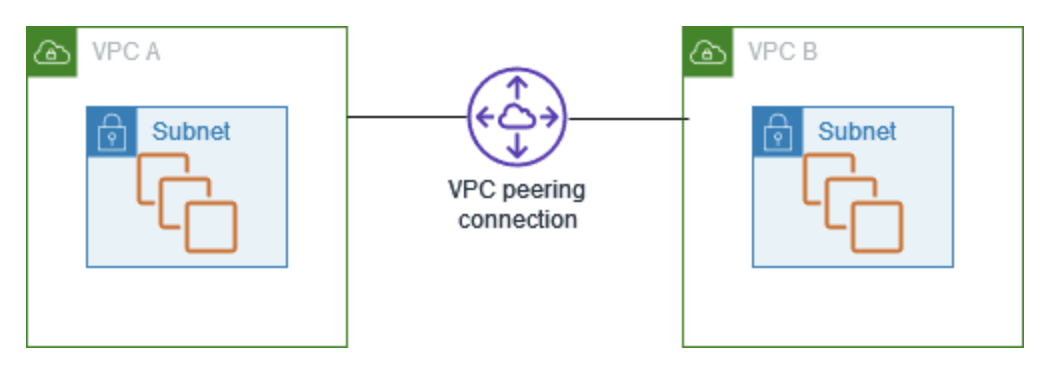

VPC peering, the first option, establishes a bidirectional networking connection between two VPCs, with traffic routed over AWS's internal network. VPC peering lets customers route traffic between the VPCs using each VPC’s private IP addresses, as if they were in the same network. Because the connection is bidirectional, it's important to apply the appropriate security controls and rules to prevent unauthorized access to your centralized logging VPC from any of the VPCs hosting your applications and services.
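Because peering opens the path in both directions, it helps to audit the inbound rules that protect the logging VPC. The Python below is a minimal, hypothetical check: `InboundRule` is a simplified stand-in for a security group rule (not an AWS API type), and the CIDRs and the log port (24224, Fluentd's default forward port) are assumptions chosen for illustration. It flags any rule whose source is not inside a peered application VPC or that opens ports other than the log ingestion port.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class InboundRule:
    """Hypothetical, simplified security-group-style inbound rule."""
    cidr: str       # source CIDR allowed by the rule
    from_port: int  # first port opened (inclusive)
    to_port: int    # last port opened (inclusive)


def overly_broad_rules(rules, app_vpc_cidrs, log_ports):
    """Return rules that admit sources outside the application VPCs
    or open ports other than the expected log ingestion ports."""
    app_nets = [ipaddress.ip_network(c) for c in app_vpc_cidrs]
    flagged = []
    for rule in rules:
        src = ipaddress.ip_network(rule.cidr)
        # The source range must sit entirely inside one peered application VPC.
        inside_app_vpc = any(src.subnet_of(net) for net in app_nets)
        # Every port the rule opens must be a log ingestion port.
        ports_ok = all(p in log_ports for p in range(rule.from_port, rule.to_port + 1))
        if not (inside_app_vpc and ports_ok):
            flagged.append(rule)
    return flagged


# Illustrative inputs only: two peered application VPCs and one log port.
APP_VPC_CIDRS = ["10.1.0.0/16", "10.2.0.0/16"]
LOG_PORTS = {24224}
RULES = [
    InboundRule("10.1.0.0/16", 24224, 24224),  # expected: app VPC to log port
    InboundRule("0.0.0.0/0", 0, 65535),        # too broad: any source, any port
    InboundRule("10.2.5.0/24", 22, 22),        # right source, wrong port (SSH)
]

flagged = overly_broad_rules(RULES, APP_VPC_CIDRS, LOG_PORTS)
for rule in flagged:
    print("review:", rule)
```

In practice you would feed it rules exported from your own security groups; the point is that the peering connection itself does not limit which sources or ports can reach the logging VPC.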

Amazon Virtual Private Cloud (VPC) Peering

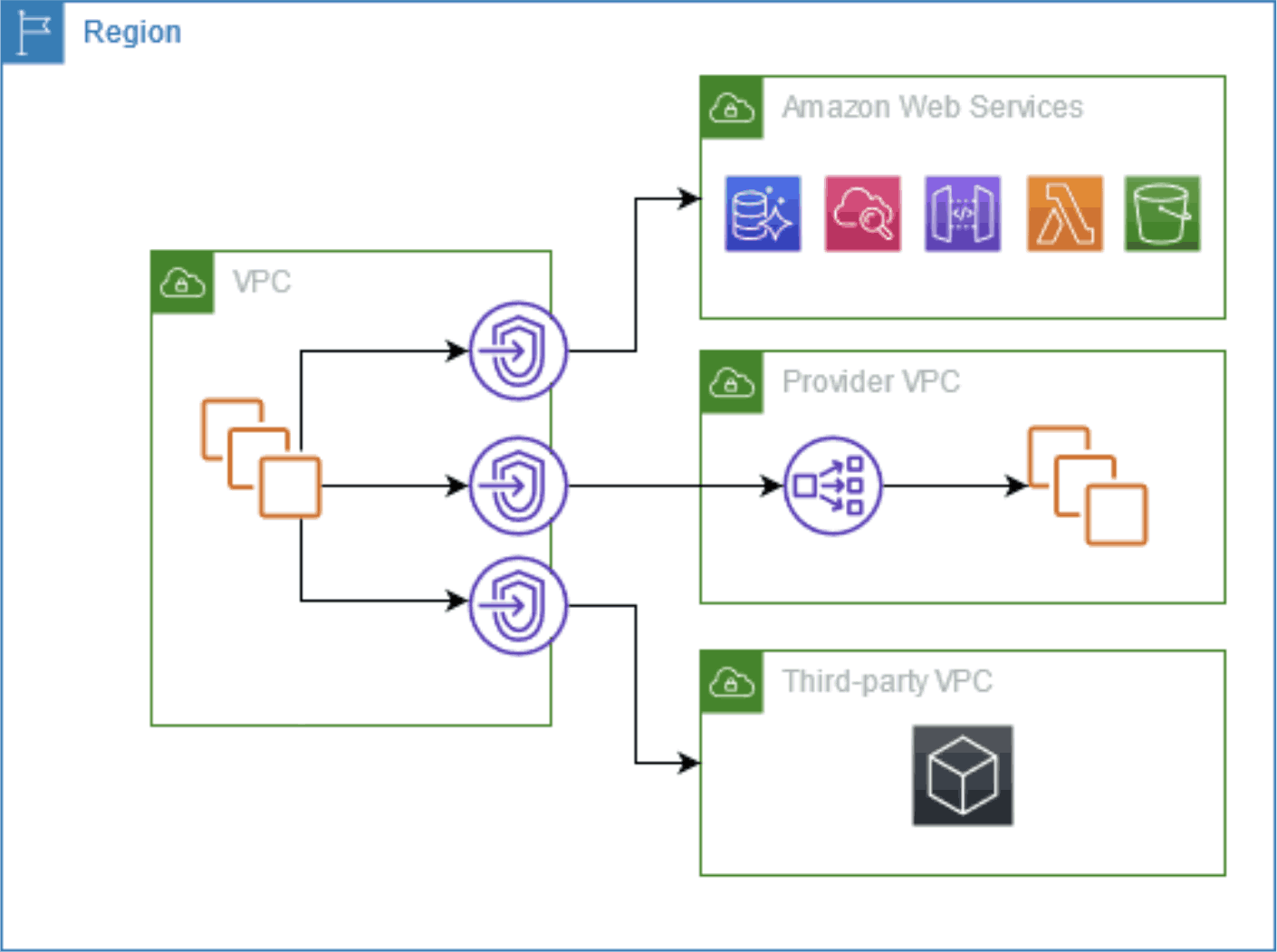

AWS PrivateLink is another option for transferring data securely between VPCs without routing traffic over the public internet. It provides a one-way connection from the VPCs hosting the applications and services to the VPC hosting the log analytics solution (self-hosted or SaaS).

AWS PrivateLink

But just like VPC peering, AWS PrivateLink can be costly. PrivateLink endpoints are billed for every hour they are provisioned, plus a charge based on the volume of data processed over the connection. In both cases, transferring log data out of your network to an external VPC for analytics incurs data transfer and processing fees that reduce the cost efficiency of your log analytics initiative.

A secondary challenge that emerges from this architecture is duplicate data storage and its associated costs. Customers pay to store logs in the log analytics solution, either as infrastructure costs (block storage and disks) for self-hosted solutions or as retention charges packaged into SaaS pricing. Many customers also keep a copy of the raw logs on low-cost storage such as Amazon S3, both as a backup for re-indexing and recovery tasks and to retain log data beyond the SaaS provider's retention window for incident investigations, compliance, and other needs.
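To see why the second copy matters, here is a back-of-the-envelope sketch in Python. Every input is hypothetical: 1,000 GB of logs per day, 30 days of searchable retention in the analytics tool at an assumed 0.10 USD per GB-month, and 365 days of raw logs in Amazon S3 at an assumed flat 0.023 USD per GB-month. Tiered discounts, compression, replicas, and index overhead are ignored.

```python
from decimal import Decimal


def storage_bill(gb_per_day: int, retention_days: int, price_per_gb_month: Decimal) -> Decimal:
    """Approximate monthly storage bill for a rolling retention window.

    At steady state roughly gb_per_day * retention_days is held at any time.
    Compression, replicas, and index overhead are ignored.
    """
    return gb_per_day * retention_days * price_per_gb_month


DAILY_GB = 1000                    # hypothetical daily log volume
ANALYTICS_PRICE = Decimal("0.10")  # hypothetical analytics-tier USD per GB-month
S3_PRICE = Decimal("0.023")        # assumed flat S3 Standard USD per GB-month

analytics_copy = storage_bill(DAILY_GB, 30, ANALYTICS_PRICE)  # 30 days searchable
s3_copy = storage_bill(DAILY_GB, 365, S3_PRICE)               # 1 year of raw logs
total = analytics_copy + s3_copy

print(f"analytics copy: {analytics_copy:,.2f} USD/month")
print(f"S3 raw copy:    {s3_copy:,.2f} USD/month")
print(f"total:          {total:,.2f} USD/month")
```

Under these assumptions the analytics-tier copy adds 3,000 USD per month on top of the S3 archive, to hold data that already exists in S3.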

When accounting for initial data storage costs, data processing fees, and the cost of storing duplicate data, the cost of log analytics often seems to scale up faster than the benefits.

ChaosSearch Eliminates Data Movement and Enables Cost-Effective Analytics

At ChaosSearch, we’re pioneers of the No Data Movement Movement.

Recognizing the high costs of data processing fees at scale and the inefficiency of duplicate data storage, we designed the ChaosSearch data lake platform in a way that eliminates the need for data movement and duplicate storage in the log analytics process flow.

ChaosSearch customers aggregate and store log data in their own Amazon S3 buckets. The difference is that ChaosSearch reads, indexes, and analyzes the data directly in those buckets, with no data movement or duplication.

How do we do it?

By applying a revolutionary technology built from the ground up to use cloud object storage as the foundation for a simple, scalable, and cost-efficient analytic data lake engine. Cloud object storage, like Amazon S3 and Google Cloud Storage (GCS), offers near-infinite scalability, is secure and global, and is designed for 99.999999999% (11 nines) durability. It is the most common destination for cloud-native application logs and the default storage for cloud infrastructure and service logs, which makes it an ideal foundation for a next-generation big data analytics solution.

What about data processing fees?

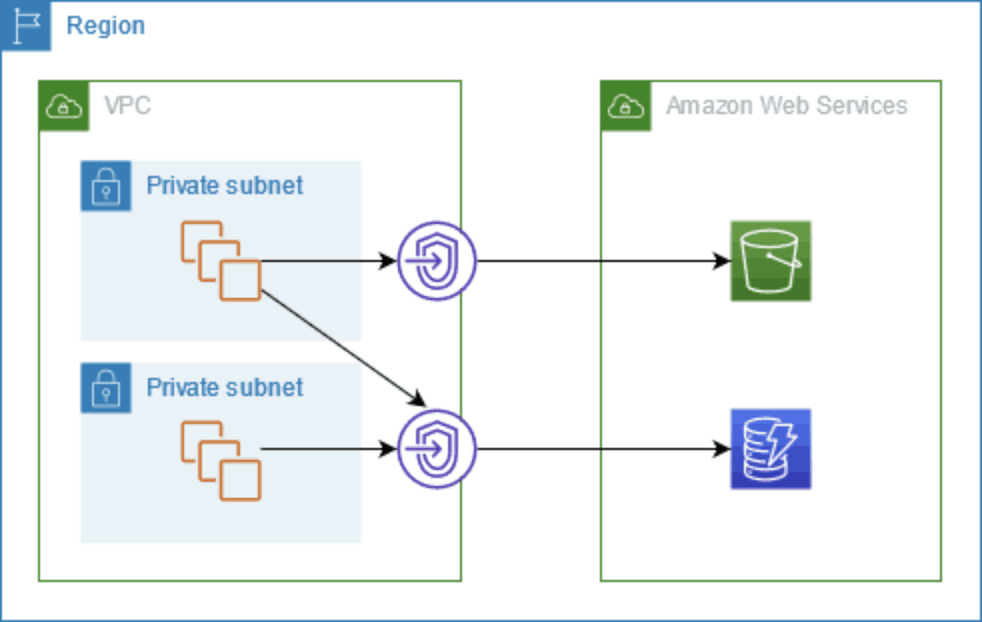

Cloud provider infrastructure and service logs (e.g., AWS CloudTrail, Elastic Load Balancing access logs, Amazon CloudFront logs) are natively delivered to cloud storage, usually free of charge. For application logs, or logs collected by agents and log collectors (e.g., Fluentd, Logstash, Cribl) on AWS, there is another secure and private way to send logs to Amazon S3: a VPC gateway endpoint.
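As a minimal sketch of the collector side, the Python below batches log records as gzipped JSON Lines and writes them to a time-partitioned key in S3. It assumes a boto3-style S3 client (boto3's `put_object(Bucket=..., Key=..., Body=...)` is a real call); the bucket name, the `app-logs` prefix, and the key layout are hypothetical. Nothing in the code refers to the endpoint: when the subnet's route table points to an S3 gateway endpoint and the bucket is in the same Region, the same request is routed through it without client changes.

```python
import gzip
import json
from datetime import datetime, timezone


def build_log_object(records, prefix, now):
    """Serialize records as gzipped JSON Lines under a time-partitioned key."""
    lines = "".join(json.dumps(record) + "\n" for record in records)
    body = gzip.compress(lines.encode("utf-8"))
    # Hypothetical layout: prefix/YYYY/MM/DD/HH/logs-<UTC timestamp>.json.gz
    key = f"{prefix}/{now:%Y/%m/%d/%H}/logs-{now:%Y%m%dT%H%M%SZ}.json.gz"
    return key, body


def ship_logs(s3_client, bucket, records, prefix="app-logs", now=None):
    """Upload one batch using any client that exposes put_object() like boto3's S3 client."""
    now = now or datetime.now(timezone.utc)
    key, body = build_log_object(records, prefix, now)
    s3_client.put_object(Bucket=bucket, Key=key, Body=body)
    return key
```

With boto3 installed and credentials configured, a call such as `ship_logs(boto3.client("s3"), "my-log-bucket", records)` would upload one batch (the bucket name is a placeholder). Production agents like Fluentd or Logstash add the buffering and retries this sketch leaves out.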

VPC gateway endpoints route traffic securely from your VPC to Amazon S3 (or DynamoDB) and incur no hourly or data processing charges, as noted at the bottom of the VPC pricing page:

Note: To avoid the NAT Gateway Data Processing charge in this example, you could setup a Gateway Type VPC endpoint and route the traffic to/from S3 through the VPC endpoint instead of going through the NAT Gateway. There is no data processing or hourly charges for using Gateway Type VPC endpoints. For details on how to use VPC endpoints, please visit VPC Endpoints Documentation.

This means customers can send their logs directly to Amazon S3 without paying endpoint hourly or data processing charges. ChaosSearch likewise uses VPC gateway endpoints to access Amazon S3 without incurring these charges, reducing overall AWS log costs by eliminating data processing fees.
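Creating the gateway endpoint is a one-time setup step. The sketch below builds the arguments for the EC2 `CreateVpcEndpoint` action as exposed by boto3's `create_vpc_endpoint`; the Region, VPC ID, and route table IDs are placeholders, and the client is passed in so the request can be inspected without calling AWS.

```python
def s3_gateway_endpoint_request(region, vpc_id, route_table_ids):
    """Keyword arguments for EC2 CreateVpcEndpoint (boto3: create_vpc_endpoint)."""
    return {
        "VpcEndpointType": "Gateway",                  # gateway type: no hourly or processing fee
        "VpcId": vpc_id,
        "ServiceName": f"com.amazonaws.{region}.s3",   # S3 service name for the Region
        "RouteTableIds": list(route_table_ids),        # S3 routes are added to these tables
    }


def create_s3_gateway_endpoint(ec2_client, region, vpc_id, route_table_ids):
    """Create the endpoint with a boto3 EC2 client and return its ID."""
    request = s3_gateway_endpoint_request(region, vpc_id, route_table_ids)
    response = ec2_client.create_vpc_endpoint(**request)
    return response["VpcEndpoint"]["VpcEndpointId"]
```

Associating the endpoint with a route table adds a route whose destination is the S3 prefix list, so instances in the associated subnets reach S3 through the endpoint instead of a NAT gateway.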

VPC gateway endpoints also offer security advantages: customers can control access with endpoint policies and with security group rules that reference the Amazon S3 prefix list, and traffic to Amazon S3 stays within the AWS network without traversing the public internet, where it could be exposed to attack.
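One way to scope a gateway endpoint is an endpoint policy that allows only the log bucket. The helper below builds such a policy as a JSON string, a sketch assuming a hypothetical bucket name and a minimal action set for writing and reading logs. The result can be passed as the `PolicyDocument` parameter when creating or modifying the endpoint; IAM and bucket policies still apply on top of it.

```python
import json


def log_bucket_endpoint_policy(bucket, actions=("s3:PutObject", "s3:GetObject", "s3:ListBucket")):
    """Endpoint policy that allows only the given actions on one (hypothetical) log bucket."""
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowLogBucketOnly",
                "Effect": "Allow",
                "Principal": "*",
                "Action": list(actions),
                # Bucket ARN covers ListBucket; the /* pattern covers object reads and writes.
                "Resource": [f"arn:aws:s3:::{bucket}", f"arn:aws:s3:::{bucket}/*"],
            }
        ],
    }
    return json.dumps(policy)


policy_json = log_bucket_endpoint_policy("example-log-bucket")
print(policy_json)
```

Requests through the endpoint to any other bucket are not allowed by this policy, which limits the endpoint to its intended log destination.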

Read: 10 Essential Cloud DevOps Tools for AWS

Measuring the Financial Impact of Reducing AWS Log Costs

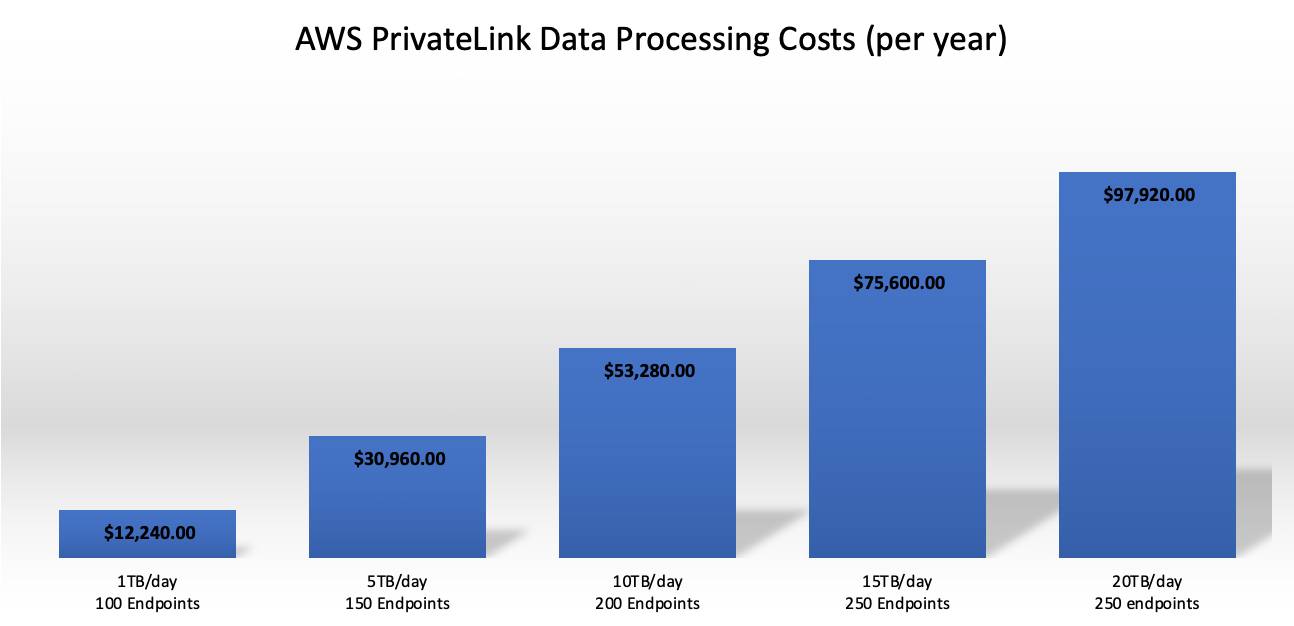

Running a quick exercise to estimate how much you can save by avoiding PrivateLink charges, we estimate that a customer with 100 VPC interface endpoints (one for each subnet inside a VPC) and 1 TB of logs generated each day could pay a total of 12,240 USD per year just to send these logs securely to their log analytics solution:

100 endpoints x 24 hrs x 30 days x 0.01 USD per endpoint-hour = 720 USD per month (VPC interface endpoint hourly charges)

1,000 GB per day x 30 days x 0.01 USD per GB = 300 USD per month (VPC endpoint data processing fee)

Total cost: 1,020 USD per month / 12,240 USD per year
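The arithmetic above can be reproduced with a small Python function. It uses the same 0.01 USD per endpoint-hour and 0.01 USD per GB rates as the estimate and a 30-day month; these are the estimate's assumptions, so check current AWS PrivateLink pricing for your Region before relying on the numbers. `Decimal` avoids floating-point rounding in currency math.

```python
from decimal import Decimal

HOURLY_RATE_PER_ENDPOINT = Decimal("0.01")  # USD per endpoint-hour, as in the estimate
PROCESSING_RATE_PER_GB = Decimal("0.01")    # USD per GB processed, as in the estimate


def privatelink_cost(endpoints: int, gb_per_day: int, days: int = 30) -> dict:
    """Split a month of PrivateLink charges into hourly and data processing parts."""
    hourly = endpoints * 24 * days * HOURLY_RATE_PER_ENDPOINT
    processing = gb_per_day * days * PROCESSING_RATE_PER_GB
    monthly = hourly + processing
    return {"hourly": hourly, "processing": processing, "monthly": monthly, "yearly": monthly * 12}


estimate = privatelink_cost(endpoints=100, gb_per_day=1000)
for name, usd in estimate.items():
    print(f"{name:>10}: {usd:,.2f} USD")
```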

The estimate below shows how these costs grow as you scale log ingestion volume and the number of endpoints:
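To explore how the estimate scales, the sketch below computes the annual cost for a few combinations of endpoint count and daily log volume, reusing the same assumed rates of 0.01 USD per endpoint-hour and 0.01 USD per GB. The endpoint counts and volumes are illustrative inputs, not measured customer data.

```python
from decimal import Decimal

HOURLY_RATE = Decimal("0.01")  # assumed USD per endpoint-hour
PER_GB_RATE = Decimal("0.01")  # assumed USD per GB processed


def annual_cost(endpoints: int, gb_per_day: int, days_per_month: int = 30) -> Decimal:
    """Annual PrivateLink cost: endpoint-hours plus per-GB processing, over 12 months."""
    monthly = (endpoints * 24 * days_per_month * HOURLY_RATE
               + gb_per_day * days_per_month * PER_GB_RATE)
    return monthly * 12


ENDPOINT_COUNTS = (10, 100, 500)        # illustrative endpoint counts
DAILY_VOLUMES_GB = (100, 1000, 10000)   # illustrative daily log volumes

# Header row, then one row per endpoint count with annual USD for each daily volume.
print("endpoints".ljust(10) + "".join(f"{gb:>12,} GB/d" for gb in DAILY_VOLUMES_GB))
for n in ENDPOINT_COUNTS:
    print(str(n).ljust(10) + "".join(f"{annual_cost(n, gb):>17,.0f}" for gb in DAILY_VOLUMES_GB))
```

The hourly term grows with the number of endpoints and the processing term with daily volume, so both levers push the annual total up independently.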

Even with these modest numbers, this cost alone can represent up to 5% of your total AWS log costs, making it a meaningful target for cost-saving efforts.

Achieve Cost-Efficient Data Analytics with ChaosSearch + AWS

Alternative log analytics solutions require data movement, which results in high data processing fees and duplicate storage costs. The innovative architecture of ChaosSearch eliminates data movement and gives our customers truly cost-efficient data analytics. Start a free trial using the link below and try it with your own data to verify the cost savings!

Additional Resources

Read the Blog: Troubleshooting Cloud Services and Infrastructure with Log Analytics

Listen to the Podcast: Making the World's AWS Bills Less Daunting

Check out the Whitepaper: Ultimate Guide to Log Analytics: 5 Criteria to Evaluate Tools